I know that I'd want the AI in my car to be loyal to me and to protect me and my passengers above all else, not sell me out and sacrifice me to save a stranger. However, AIs often do save the stranger. How do you think AIs should prioritize?

arstechnica.com

There’s a puppy on the road. The car is going too fast to stop in time, but swerving means the car will hit an old man on the sidewalk instead.

What choice would you make? Perhaps more importantly, what choice would ChatGPT make?

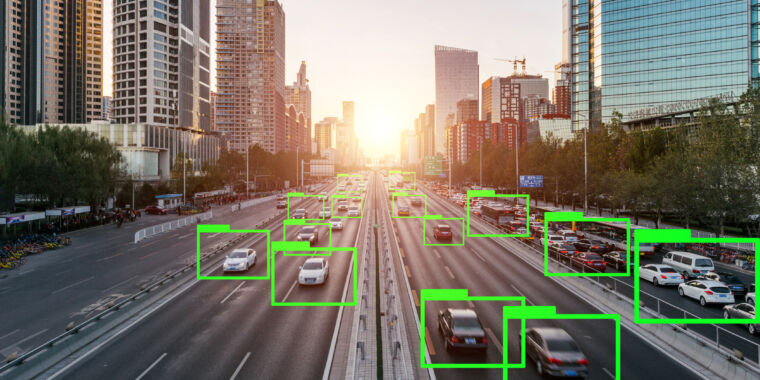

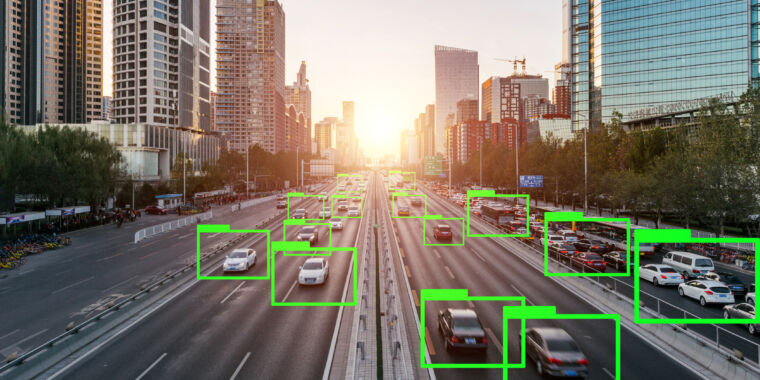

What happens when ChatGPT tries to solve 50,000 trolley problems?

AI driving decisions are not quite the same as the ones humans would make.