Written in 1991, this will be of interest to programmers and fellow nerds who want to know more about how computers do floating-point calculations. It's all about the rounding error that inevitably comes with floating-point arithmetic and how to handle it.
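If you want a taste before committing to the full read, here is a minimal Python sketch of the kind of rounding error the paper is about, plus two common ways of coping with it. It is only an illustration, not code from the paper, and the tolerance of 1e-9 is an arbitrary choice.

```python
import math
from decimal import Decimal

# 0.1 has no exact binary representation; the double actually stored
# is the nearest representable value, which is slightly larger than 0.1.
print(Decimal(0.1))  # 0.1000000000000000055511151231257827021181583404541015625

# Those tiny representation errors survive arithmetic.
s = 0.1 + 0.2
print(s)         # 0.30000000000000004
print(s == 0.3)  # False

# Coping strategy 1: compare with a relative tolerance instead of ==
# (1e-9 is an arbitrary, illustrative tolerance).
print(math.isclose(s, 0.3, rel_tol=1e-9))  # True

# Coping strategy 2: errors accumulate over many additions; math.fsum
# tracks intermediate partial sums to avoid that loss of precision.
values = [0.1] * 10
naive = sum(values)
accurate = math.fsum(values)
print(naive)     # 0.9999999999999999
print(accurate)  # 1.0
```

The right tolerance depends on the magnitudes involved and on how much error the computation can accumulate, which is exactly the sort of question the paper digs into.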

Devoid of pictures or any decent formatting, this is hardcore information on this subject. I dare say this will make excellent bedtime reading, curing insomnia in no time.

Nerd level: root 2.

Tip: you might want to copy the text into Word or something to make the presentation more readable.

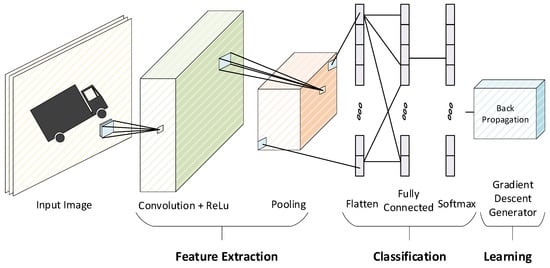

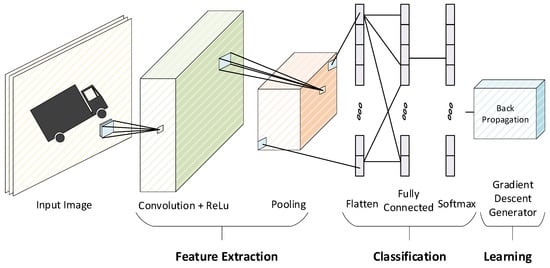

Here's a random floating-point picture from the internet, along with its source, to make up for the article's lack of pictures:

www.mdpi.com

Floating-point arithmetic is considered an esoteric subject by many people. This is rather surprising because floating-point is ubiquitous in computer systems. Almost every language has a floating-point datatype; computers from PCs to supercomputers have floating-point accelerators; most compilers will be called upon to compile floating-point algorithms from time to time; and virtually every operating system must respond to floating-point exceptions such as overflow. This paper presents a tutorial on those aspects of floating-point that have a direct impact on designers of computer systems. It begins with background on floating-point representation and rounding error, continues with a discussion of the IEEE floating-point standard, and concludes with numerous examples of how computer builders can better support floating-point.
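The abstract mentions floating-point representation and exceptions such as overflow. As a rough sketch (not code from the paper), Python's standard `struct` module can expose the three fields of an IEEE 754 double. The helper names `decompose` and `recompose` are made up for this example, and `recompose` only handles normal numbers, not zero, subnormals, infinities or NaN.

```python
import math
import struct

def decompose(x: float) -> tuple[int, int, int]:
    """Split an IEEE 754 double (binary64) into sign, biased exponent and fraction fields."""
    bits = struct.unpack(">Q", struct.pack(">d", x))[0]  # reinterpret the 8 bytes as an unsigned int
    sign = bits >> 63                    # 1 bit
    exponent = (bits >> 52) & 0x7FF      # 11 bits, stored with a bias of 1023
    fraction = bits & ((1 << 52) - 1)    # 52 bits; normal numbers have an implicit leading 1
    return sign, exponent, fraction

def recompose(sign: int, exponent: int, fraction: int) -> float:
    """Rebuild a normal double: (-1)^sign * (1 + fraction / 2^52) * 2^(exponent - 1023)."""
    return (-1) ** sign * (1 + fraction / 2**52) * 2.0 ** (exponent - 1023)

print(decompose(1.0))                     # (0, 1023, 0)
print(decompose(-2.0))                    # (1, 1024, 0)
print(recompose(*decompose(0.1)) == 0.1)  # True

# Exceptions: with default IEEE 754 handling, overflow yields infinity and
# an invalid operation (inf - inf) yields NaN instead of stopping the program.
big = 1e308 * 10
print(big)              # inf
nan = big - big
print(nan, nan == nan)  # nan False
```

Note that Python is not fully consistent here: plain float multiplication quietly returns `inf`, while some operations such as `1e308 ** 2` raise `OverflowError` instead.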

Optimal Architecture of Floating-Point Arithmetic for Neural Network Training Processors

The convergence of artificial intelligence (AI) is one of the critical technologies in the recent fourth industrial revolution. The AIoT (Artificial Intelligence Internet of Things) is expected to be a solution that aids rapid and secure data processing. While the success of AIoT demanded...