It might surprise you, but there's no such thing as an analog output from a digital computer. The output remains digital, no matter what.

This might seem a strange thing to say when there are "analog" audio outputs on sound cards for connecting analog speakers, and "analog" VGA ports on older graphics cards for connecting analog CRT monitors, but it's true. What they really output are analog-compatible digital signals. Allow me to explain.

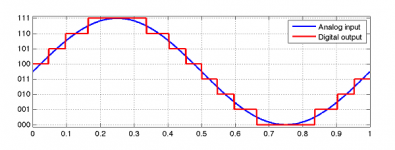

Your regular PC, smartphone, games console, etc., being digital, works in the base 2* (binary) number system, so any analog input from the real world first has to be digitised by an analog-to-digital converter (ADC). From that point on, the signal is represented by a stream of numbers, and there's no going back, even when the signal is converted back to "analog" by a digital-to-analog converter (DAC). All the DAC actually does is make the digital signal compatible with analog equipment by converting it to a higher number base, that's it. The exact base used depends on the resolution, or bit depth, of the DAC.
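To make the number-base argument concrete, here's a minimal Python sketch. `to_base` is a hypothetical helper written purely for illustration, and 40000 is just an arbitrary 16-bit sample value. Written in base 2 it needs 16 digits, but in base 65536 it's a single digit, which is the sense in which a 16-bit DAC outputs one "digit" as one voltage.

```python
def to_base(value: int, base: int) -> list[int]:
    """Return the digits of a non-negative integer in the given base, most significant first."""
    if value < 0 or base < 2:
        raise ValueError("value must be >= 0 and base must be >= 2")
    digits = []
    while True:
        value, digit = divmod(value, base)  # peel off the least significant digit
        digits.append(digit)
        if value == 0:
            break
    return digits[::-1]


sample = 40000  # a hypothetical 16-bit sample value
print(len(to_base(sample, 2)))  # number of binary digits (counting columns)
print(to_base(sample, 65536))   # the same value as base-65536 digits
```

The same value never needs a second counting column in base 65536, however large it gets, as long as it fits in 16 bits.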

To understand why, let's look at a single CD-quality audio channel: that's 16-bit amplitude resolution with a sampling rate of 44.1 kHz.

General analog to digital conversion. Picture: source

Those 16 bits give 2^16 possible values, meaning that the signal amplitude, or loudness, can only occupy one of 65536 discrete levels, with a value between 0 and 65535, and that's exactly what you get out of a DAC. That value can also only change at a maximum rate of 44100 times a second rather than continuously, so the signal is still digital in the time domain, too. All that has happened is that the DAC has converted the base 2 instantaneous value of the signal to base 65536. The number, represented by a variable voltage, never carries over into the next counting column (think of the 10s, 100s and 1000s columns in our familiar base 10 system, or the 2s, 4s and 8s columns in base 2), and that is what allows analog equipment to be compatible with the computer.
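Here's a minimal Python sketch of what a 16-bit, 44.1 kHz channel does to a smooth signal. It samples a 1 kHz sine wave (an arbitrary test tone) and rounds each sample to one of 65536 levels; `quantise_sine` is a hypothetical name used only for this example. It assumes an offset-binary range of 0 to 65535 to match the description above. Real CD audio actually stores signed 16-bit samples from -32768 to 32767, which is the same 65536 levels shifted down.

```python
import math

SAMPLE_RATE = 44_100      # CD sampling rate, samples per second
BIT_DEPTH = 16            # CD amplitude resolution in bits
LEVELS = 2 ** BIT_DEPTH   # 65536 discrete levels


def quantise_sine(freq_hz: float, n_samples: int) -> list[int]:
    """Sample a full-scale sine wave and round each sample to one of LEVELS integers."""
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE                      # time only advances in steps of 1/44100 s
        x = math.sin(2 * math.pi * freq_hz * t)  # the ideal "analog" value, in [-1, 1]
        # Map [-1, 1] onto the offset-binary range 0..65535 and round to the nearest level
        code = round((x + 1) / 2 * (LEVELS - 1))
        samples.append(code)
    return samples


codes = quantise_sine(1_000, 44)  # roughly 1 ms of a 1 kHz tone
print(f"sample period: {1e6 / SAMPLE_RATE:.2f} microseconds")
print(codes[:5])
```

Every output is a whole number from a fixed set of 65536, and there is nothing in between samples: that is the amplitude and time quantisation described above.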

The same is true of that analog CRT-compatible VGA output. With 24-bit colour there are 8 bits per colour, so, taking just one of the three colours (red, green or blue), 2^8 means that the signal, represented by a variable voltage, can only occupy one of 256 discrete levels, with a value between 0 and 255, and that's what you see on the monitor. The signal is still digital, simply converted to base 256.
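The colour case can be sketched the same way in Python. This assumes a 24-bit colour packed as `0xRRGGBB` and an ideal 8-bit DAC per channel with the nominal VGA full-scale swing of 0.7 V; `unpack_rgb` and `channel_to_volts` are hypothetical helpers for illustration, not any graphics driver's API.

```python
FULL_SCALE_VOLTS = 0.7  # assumed nominal VGA colour-signal swing (0 V = black, 0.7 V = full intensity)


def unpack_rgb(colour: int) -> tuple[int, int, int]:
    """Split a 24-bit 0xRRGGBB colour into three 8-bit channel values (0-255)."""
    return (colour >> 16) & 0xFF, (colour >> 8) & 0xFF, colour & 0xFF


def channel_to_volts(code: int) -> float:
    """Ideal 8-bit DAC: map a channel value 0-255 onto one of 256 evenly spaced voltages."""
    if not 0 <= code <= 255:
        raise ValueError("channel value must be 0-255")
    return code / 255 * FULL_SCALE_VOLTS


r, g, b = unpack_rgb(0xFF8000)  # an orange pixel
print(r, g, b)
print([round(channel_to_volts(c), 4) for c in (r, g, b)])
```

Each wire carries a voltage, but only 256 distinct voltages are ever produced per channel.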

So, if the signal is still digital, why does a modern LCD monitor show an inferior picture when connected via VGA rather than HDMI, DVI, etc., even when it's still being driven at its native resolution? One word: mistracking. The ADC in the monitor does not track the values output by the DAC in the graphics card with 100% accuracy, so the values it reads are slightly wrong, reducing picture quality.
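Mistracking can be illustrated with a toy model in Python. The gain and offset errors below are hypothetical numbers chosen for illustration, not measurements of any real graphics card or monitor. The graphics-card DAC is assumed to be perfect, so any error comes from the monitor's ADC, and the 0.7 V swing is the assumed nominal VGA level.

```python
FULL_SCALE_VOLTS = 0.7  # assumed nominal VGA colour-signal swing


def dac(code: int) -> float:
    """Graphics-card DAC, assumed ideal: 8-bit code -> voltage."""
    return code / 255 * FULL_SCALE_VOLTS


def monitor_adc(volts: float, gain_error: float = 0.0, offset_volts: float = 0.0) -> int:
    """Monitor ADC with hypothetical gain and offset errors: voltage -> 8-bit code."""
    measured = volts * (1 + gain_error) + offset_volts
    code = round(measured / FULL_SCALE_VOLTS * 255)
    return min(max(code, 0), 255)  # clamp to the valid 8-bit range


def count_mistracked(gain_error: float, offset_volts: float) -> int:
    """Count how many of the 256 levels come back as a different value after DAC -> ADC."""
    return sum(
        1 for code in range(256)
        if monitor_adc(dac(code), gain_error, offset_volts) != code
    )


print(count_mistracked(0.0, 0.0))     # perfectly matched DAC and ADC
print(count_mistracked(0.01, 0.002))  # 1% gain error plus a 2 mV offset
```

With perfectly matched converters every level survives the round trip; even small errors in the monitor's ADC shift many of the 256 levels to a neighbouring value.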

This is because reading a signal that can take one of 256 possible values requires far more accuracy than reading one that can only take one of two values, 0 or 1. Achieving that level of accuracy over a VGA connection would no doubt increase the cost of the monitor, requiring more expensive components and more elaborate calibration, which wouldn't be worth it when simply leaving out the unnecessary DAC-to-ADC conversion eliminates the problem. It's also quite possible that the DAC in the graphics card isn't very accurate either, compounding the problem.
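A rough way to quantify that extra accuracy: if a signal's levels are evenly spaced over a fixed voltage swing, the receiver reads the right value as long as the error stays under half the gap between adjacent levels. This Python sketch assumes the same 0.7 V swing for both cases purely for comparison (real digital links such as HDMI and DVI use their own signalling levels), and `max_tolerable_error` is a hypothetical helper.

```python
def max_tolerable_error(full_scale_volts: float, levels: int) -> float:
    """Largest voltage error that still decodes to the correct level.

    Levels are assumed evenly spaced across the full-scale swing; a reading is
    decoded correctly as long as it stays within half a step of its true level.
    """
    if levels < 2:
        raise ValueError("need at least two levels")
    step = full_scale_volts / (levels - 1)
    return step / 2


SWING = 0.7  # assumed: the same 0.7 V swing for both cases, for comparison only

binary = max_tolerable_error(SWING, 2)       # two levels: 0 or 1
eight_bit = max_tolerable_error(SWING, 256)  # 256 levels: one VGA colour channel
print(f"2 levels:   +/- {binary * 1000:.2f} mV")
print(f"256 levels: +/- {eight_bit * 1000:.2f} mV")
print(f"ratio: {binary / eight_bit:.0f}x")
```

Under these assumptions, the 256-level signal tolerates 255 times less error than the two-level one, which is why the analog link needs such precise components and calibration.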

So, have I convinced you that there's no such thing as an analog output from a digital computer? Let us know your thoughts in the comments below, but I especially want to hear from you if you think I'm wrong and why, which should make it rather interesting.

Digital signal processing is a massive subject, and I've only touched on one basic aspect of it that people don't normally think about, so check out the links below for more information.

Finally, look out for my upcoming article on why all digital computers and devices are really analog machines just being made to work digitally. Clue: it's really simple. See if you can figure it out before you read the article.

Read all about ADC and DAC signal conversion on Wikipedia. The basics of number bases are explained at Purplemath.

*Other number bases are technically possible and have been used, e.g. base 3, but base 2 is the most suitable for most situations, which is why virtually all computers use it nowadays, regardless of their design or cost, from the biggest supercomputers to calculators. This even includes quantum computers which just add a superposition state of 0 and 1 for that quantum